Machine Learning Evasion Attacks: How Adversaries Trick AI Models

Introduction

Five years ago, this blog might have been about cryptocurrencies or blockchain, the “next big thing” at the time. Fast forward to 2025, and the spotlight has shifted. Crypto has faded from the headlines, and now machine learning and AI are the stars of the show. This shift isn’t just a passing trend; it’s changing the marketplace across industries. But for the first time, it’s reshaping software engineering in a fundamental way.

Senior engineers who harness AI and machine learning report dramatic productivity gains, with some claiming 10x their usual output. This isn’t just about efficiency; it’s about economics. As these tools become standard, the market will adjust. Senior engineers might find their value decreasing as the tools level the playing field, while junior engineers could struggle to find a foothold in a world where AI bridges experience gaps.

But the influence of machine learning doesn’t stop at tech. These models are becoming the silent workhorses in industries far removed from Silicon Valley, including construction, accounting, and more. They’re behind facial recognition systems, autonomous vehicles, and advanced cybersecurity protocols. Yet, for all their power, these models have an Achilles’ heel: they can be tricked.

In this post, we’ll explore machine learning evasion attacks, a class of adversarial techniques designed to fool even the most refined models.

At their core, evasion attacks exploit the delicate balance between a model’s precision and its ability to generalize. Sounds technical? Let me make it simple.

They say a picture is worth a thousand words, and this one says it all. To you and me, the sign clearly says 35 mph. But computers don’t see the world like we do. Imagine a self-driving Tesla encountering this sign. Instead of recognizing the speed limit as 35, it might read it as 85 mph and adjust its speed accordingly. Terrifying, right? This is the essence of an evasion attack: small, carefully chosen changes that a human barely notices, but that cause a model to misinterpret data in potentially dangerous ways.

These tiny tweaks, or perturbations, can lead to catastrophic misclassifications. They expose the hidden flaws in the algorithms we trust.

We’ll dive into different types of evasion attacks:

- White-box attacks, where attackers have full access to the model’s internals

- Gray-box attacks, where attackers might see probability scores or partial outputs

- Black-box attacks, where only the final decision is visible

- Transfer-based attacks, where adversarial examples crafted against one model are reused against another

By the end of this post, you’ll understand how evasion attacks work and why they matter. Recognizing these vulnerabilities is the first step toward building more secure, resilient systems in a world increasingly powered by machine learning.

Background: Understanding Machine Learning Models

Before I start rambling about adversarial attacks, let’s cover a high-level overview of our target: a machine learning model. At its core, a model is a mathematical function, a complex algorithm refined through experience, that maps inputs to outputs. These models are designed to identify patterns in data by learning from large numbers of examples. During the training process, the model adjusts its internal parameters to minimize errors and improve prediction accuracy, whether it is classifying images, recognizing speech, or forecasting trends.

This process transforms raw data into a set of learned features, enabling the model to make informed decisions. However, the same intricacy that gives these models their power can also expose vulnerabilities. Adversarial attacks exploit subtle weaknesses in the mapping process, introducing carefully crafted inputs that can mislead the model into making incorrect predictions.

What Is a Model?

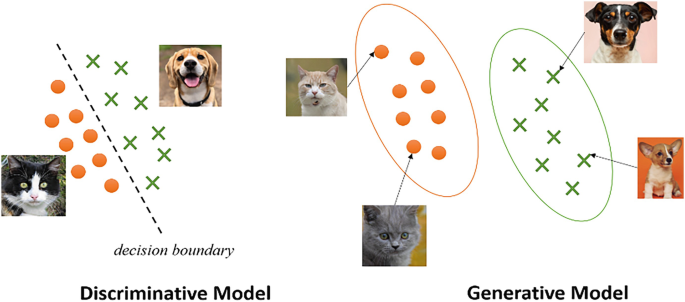

A machine learning model is not magic; it’s a systematic transformation of data into predictions. There are various types of models:

- Classifiers: These models sort data into distinct categories, like determining whether an image depicts a cat or a dog.

- Regressors: Instead of discrete categories, these models predict continuous values, such as estimating the price of a house based on its features.

No matter the type, every model operates under a simple principle: learn from data. During training, a model sifts through a vast trove of examples, gradually adjusting its internal parameters to reduce errors and improve accuracy. The journey from raw data to refined prediction is one of continuous refinement, where the model learns to navigate the often murky waters between overfitting and underfitting.

How Do Models Work?

At the heart of model training lies optimization. Picture standing atop a vast, undulating landscape where every point represents a unique configuration of the model’s parameters. The model’s objective is to navigate this terrain and find the lowest valley, the point where the difference between its predictions and the actual outcomes, known as the loss, is minimized. This journey is guided by optimization techniques like gradient descent.
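Here is a minimal NumPy sketch of the "walk downhill" picture. It runs gradient descent on a toy one-parameter loss, L(w) = (w - 3)^2, whose lowest valley sits at w = 3. The loss, learning rate, and step count are illustrative assumptions and are not taken from any real model. Evasion attacks run the same kind of loop in reverse: the parameters stay frozen and the input is nudged uphill on the loss.

```python
import numpy as np

def gradient_descent(grad_fn, params, lr=0.1, steps=100):
    """Repeatedly move the parameters a small step against the loss gradient."""
    for _ in range(steps):
        params = params - lr * grad_fn(params)
    return params

# Toy loss L(w) = (w - 3)^2 has gradient dL/dw = 2 (w - 3) and its minimum at w = 3
w_final = gradient_descent(lambda w: 2 * (w - 3.0), np.array([0.0]))
print(w_final)  # very close to [3.]
```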

This process is similar to steering a ship through unpredictable waters. Even the slightest change in direction, much like a minor parameter adjustment, can dramatically alter the course. The model’s decision boundary, the invisible line that separates one prediction from another, is shaped by these parameters. However, in high-dimensional spaces, these boundaries are not rigid walls but fragile, almost imperceptible membranes. An image has thousands or millions of features, so many changes that are each too small to notice can add up to a large shift in the model’s output. It is along these thin, delicate lines that adversarial attacks exploit weaknesses.
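You can check numerically that many small per-feature changes add up in high dimensions. The sketch below follows the linearity argument from Goodfellow et al. Take a linear score w · x and nudge every feature by ±ε in the direction of sign(w). The score shifts by exactly ε‖w‖₁, which grows with the number of features even though no single feature moves by more than ε. The random weights and ε = 0.01 are arbitrary illustrative choices.

```python
import numpy as np

def score_shift(n_features, epsilon=0.01, seed=0):
    """Change in a linear score w.x when every feature moves by +/- epsilon along sign(w)."""
    w = np.random.default_rng(seed).normal(size=n_features)  # Random toy weights
    eta = epsilon * np.sign(w)  # Per-feature change never exceeds epsilon
    return float(w @ eta)  # Equals epsilon * ||w||_1

for n in (10, 1_000, 100_000):
    print(n, round(score_shift(n), 2))
```

The per-feature budget stays fixed, but the effect on the score scales roughly linearly with the number of features.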

Adversarial Examples

Adversarial examples are, at their core, a paradox. Inputs that look virtually identical to the human eye can cause a machine learning model to stumble in its predictions. These subtle manipulations, crafted with mathematical precision, exploit the fragile decision boundaries that models draw in high-dimensional spaces.

To fully understand how these vulnerabilities are exploited, we need to dive into the paradigms of attack and associated threat models.

Levels of Model Access

Attack strategies are primarily defined by how much an attacker knows about the model:

- White-Box Attacks: The attacker has full access to the model’s architecture, weights, and gradients.

- Gray-Box Attacks: The attacker has partial access, often seeing output probabilities but not internal mechanics.

- Black-Box Attacks: The attacker only knows the final decision (e.g., “yes” or “no”) without insight into how it was reached.

Targeted vs. Non-Targeted Attacks

Beyond the level of access, the intent of the attack further differentiates these strategies:

- Targeted Attacks: The attacker wants a specific incorrect output (e.g., making a “stop” sign appear as a “speed limit” sign).

- Non-Targeted Attacks: The goal is simply to cause an incorrect classification, regardless of the specific output.
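In a single gradient step, the difference between the two intents shows up as a sign flip. The sketch below uses a hypothetical three-class linear softmax classifier in NumPy with logits z = W x. The names `input_gradient` and `one_step` are illustrative helpers, not library functions. A non-targeted step climbs the loss of the true class, while a targeted step descends the loss of the chosen target class.

```python
import numpy as np

def softmax(z):
    e = np.exp(z - z.max())  # Shift for numerical stability
    return e / e.sum()

def input_gradient(W, x, y):
    """Gradient of the cross-entropy loss -log p_y with respect to x, for logits z = W @ x."""
    p = softmax(W @ x)
    return W.T @ (p - np.eye(len(p))[y])

def one_step(W, x, epsilon, true_label=None, target_label=None):
    if target_label is not None:
        # Targeted: move DOWN the loss of the chosen target class
        return x - epsilon * np.sign(input_gradient(W, x, target_label))
    # Non-targeted: move UP the loss of the true class
    return x + epsilon * np.sign(input_gradient(W, x, true_label))

W = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]])
x = np.array([0.5, 0.2])  # Classified as class 0
print(np.argmax(W @ x))  # 0
print(np.argmax(W @ one_step(W, x, 0.3, true_label=0)))  # 1 (some wrong class)
print(np.argmax(W @ one_step(W, x, 0.6, target_label=2)))  # 2 (the chosen class)
```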

Detailed Exploration of Evasion Attack Techniques

White-Box Attacks

White-box attacks grant an adversary complete visibility into a machine learning model’s architecture, weights, and gradients. This full access allows the attacker to craft adversarial examples that are both subtle and highly effective. In the following sections, we explore several common white-box attack techniques, examining their methodology, PyTorch implementations, and real-world implications.

Fast Gradient Sign Method (FGSM)

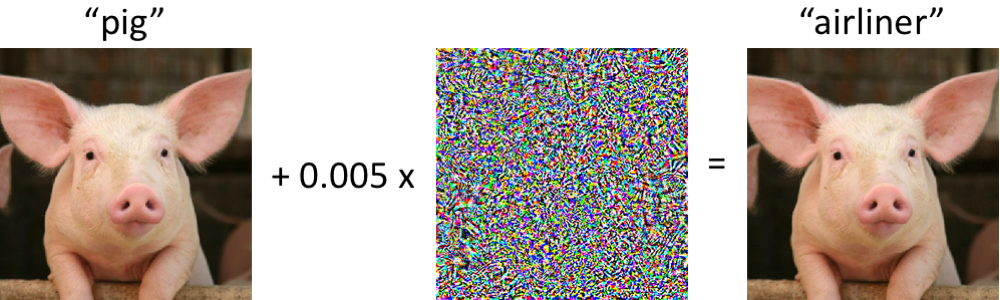

FGSM, introduced by Goodfellow et al. in 2015, is one of the earliest methods to generate adversarial examples. It leverages the gradient of the loss function with respect to the input data to determine the direction in which the input should be perturbed. Despite its simplicity, FGSM is powerful enough to fool models with minimal modifications.

How FGSM Works

- Compute the gradient of the loss with respect to the input.

- Take the sign of that gradient and apply a small perturbation scaled by ε (epsilon) in the direction that increases the loss.
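The two steps above can be written as a single update. Here J(θ, x, y) is the training loss, θ the frozen model parameters, x the input, and y its true label. The final clip to [0, 1] corresponds to the pixel-range clamp in the implementation:

$$
x_{\text{adv}} = \operatorname{clip}_{[0,1]}\Big(x + \epsilon \cdot \operatorname{sign}\big(\nabla_x J(\theta, x, y)\big)\Big)
$$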

FGSM Implementation in PyTorch

```python
import torch
import torch.nn.functional as F

def fgsm_attack(model, image, label, epsilon):
    """Generates an adversarial example using FGSM.

    Assumes `model` returns raw logits and `image` holds pixel values in [0, 1]
    (use F.nll_loss instead if the model already outputs log-probabilities).
    """
    image = image.clone().detach().requires_grad_(True)  # Work on a copy and track its gradient
    output = model(image)  # Forward pass
    loss = F.cross_entropy(output, label)  # Loss with respect to the true label
    model.zero_grad()  # Reset parameter gradients
    loss.backward()  # Compute gradients with respect to the input
    perturbation = epsilon * image.grad.sign()  # Compute adversarial perturbation
    adversarial_image = image + perturbation  # Apply perturbation
    adversarial_image = torch.clamp(adversarial_image, 0, 1)  # Ensure valid pixel values
    return adversarial_image.detach()
```

Why FGSM is Effective

- Fast: Generates adversarial examples in a single step.

- Minimal distortion: Small changes can significantly impact classification.

- Widely transferable: Adversarial examples often remain effective across different models.

Real-World Example

Researchers applied FGSM to an image of a panda, resulting in an adversarial image that was misclassified as a gibbon with 99% confidence, even though the modifications were nearly imperceptible.

Projected Gradient Descent (PGD) Attack

PGD enhances the FGSM approach by applying the perturbation iteratively. Instead of one large step, PGD makes several small adjustments, ensuring the adversarial example stays within a defined perturbation boundary.

How PGD Works

- Start with an initial perturbation, often randomized.

- Iteratively apply small gradient-based updates and project the result back into the allowed perturbation space.

- Continue until the adversarial example reliably causes misclassification.
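The PGD loop can be written as follows. J(θ, x, y) is the loss with frozen parameters θ, x is the clean input with true label y, α is the per-step size, and x^{(0)} is the random start. The operator Π projects back onto the set of images that lie within ε of x in the L∞ sense and whose pixels stay in [0, 1]:

$$
x^{(0)} = x + \delta, \qquad \delta \sim \mathcal{U}(-\epsilon, \epsilon)^d
$$

$$
x^{(t+1)} = \Pi_{\{x' \,:\, \lVert x' - x \rVert_\infty \le \epsilon,\ x' \in [0,1]^d\}}\Big(x^{(t)} + \alpha \cdot \operatorname{sign}\big(\nabla_x J(\theta, x^{(t)}, y)\big)\Big)
$$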

PGD Implementation in PyTorch

```python
import torch
import torch.nn.functional as F

def pgd_attack(model, image, label, epsilon, alpha, num_iter):
    """Generates an adversarial example using L-infinity PGD with a random start.

    Assumes `model` returns raw logits and pixels lie in [0, 1].
    """
    # Random start inside the epsilon-ball around the clean image
    perturbed_image = image + torch.empty_like(image).uniform_(-epsilon, epsilon)
    perturbed_image = torch.clamp(perturbed_image, 0, 1).detach().requires_grad_(True)

    for _ in range(num_iter):
        output = model(perturbed_image)
        loss = F.cross_entropy(output, label)
        model.zero_grad()
        loss.backward()
        with torch.no_grad():
            perturbed_image = perturbed_image + alpha * perturbed_image.grad.sign()  # Small step in gradient direction
            perturbed_image = torch.clamp(perturbed_image, image - epsilon, image + epsilon)  # Project back into the epsilon-ball
            perturbed_image = torch.clamp(perturbed_image, 0, 1)  # Keep within valid pixel range
        perturbed_image = perturbed_image.detach().requires_grad_(True)  # Fresh leaf tensor for the next iteration

    return perturbed_image.detach()
```

Why PGD is More Powerful Than FGSM

- Iterative: Allows for finer adjustments and a more effective search for vulnerabilities.

- More resilient: Often bypasses defenses that mitigate single-step attacks.

- Customizable: Parameters like step size and iteration count offer flexibility in the attack’s strength.

Carlini & Wagner (C&W) Attack

The C&W attack is an optimization-based method designed to find the smallest possible perturbation that still forces a misclassification while remaining imperceptible.

How C&W Works

- The attack formulates the problem as finding the minimal change needed to trick the model, then uses iterative gradient descent (with optimizers like Adam) to refine the adversarial example.

- It incorporates a confidence parameter that balances subtle perturbations with a high likelihood of misclassification.
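Here is the objective in its untargeted L2 form, following Carlini & Wagner (2017). Z(x') denotes the logits, y the true label, κ the confidence parameter, and c a constant that trades distortion against misclassification. The tanh change of variables keeps every pixel in [0, 1] without clipping:

$$
\min_{w} \; \lVert x' - x \rVert_2^2 + c \cdot f(x'), \qquad x' = \tfrac{1}{2}\big(\tanh(w) + 1\big)
$$

$$
f(x') = \max\Big(Z(x')_y - \max_{i \ne y} Z(x')_i,\; -\kappa\Big)
$$

For a targeted attack toward class t, the misclassification term becomes max(max_{i≠t} Z(x')_i − Z(x')_t, −κ).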

C&W Attack Implementation in PyTorch

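```python
import torch
import torch.nn.functional as F
import torch.optim as optim

def cw_attack(model, image, label, c=1.0, confidence=0.0, lr=0.01, max_iter=1000):
    """Simplified untargeted Carlini & Wagner L2 attack with a fixed constant c.

    Assumes `model` returns raw logits and pixels lie in [0, 1]. The full attack also
    binary-searches over c; that outer loop is omitted here.
    """
    # Change of variables: x' = (tanh(w) + 1) / 2 always lies in [0, 1]
    w = torch.atanh((image * 2 - 1).clamp(-1 + 1e-6, 1 - 1e-6)).detach().requires_grad_(True)
    optimizer = optim.Adam([w], lr=lr)
    with torch.no_grad():
        num_classes = model(image).shape[1]
    true_mask = F.one_hot(label, num_classes).bool()

    for _ in range(max_iter):
        perturbed_image = (torch.tanh(w) + 1) / 2
        logits = model(perturbed_image)
        true_logit = logits[true_mask]  # Z(x')_y for each example
        best_other = logits.masked_fill(true_mask, float("-inf")).max(dim=1).values  # Best wrong-class logit
        # Misclassification term: stops pushing once another class leads by `confidence`
        f = torch.clamp(true_logit - best_other, min=-confidence)
        l2 = ((perturbed_image - image) ** 2).flatten(1).sum(dim=1)  # Squared L2 distortion
        loss = (l2 + c * f).sum()  # Small perturbation AND misclassification
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()  # Update the perturbation (through w)

    return ((torch.tanh(w) + 1) / 2).detach()
```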

Why C&W is Effective

- It finds the smallest necessary modifications, making the attack nearly undetectable.

- The iterative approach refines the perturbation until misclassification is achieved, even against robust defenses. Notably, it defeated defensive distillation, a defense that earlier attacks had struggled against.

- Its use of a confidence parameter enables fine control over the trade-off between subtlety and certainty.

Real-World Example

The C&W attack has been successfully used to bypass commercial AI-powered malware detection systems. By adjusting only a few features of a malicious file, the attack led to misclassification, demonstrating serious vulnerabilities in security applications.

Jacobian-Based Saliency Map Attack (JSMA)

JSMA focuses on modifying only the most influential input features. By computing a saliency map, the attack selectively perturbs a small number of pixels, ensuring minimal overall alteration while achieving the desired misclassification.

How JSMA Works

- A saliency map is computed to identify the pixels that most strongly affect the model’s decision.

- Only these critical pixels are altered in small increments until the model’s prediction changes.
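For reference, Papernot et al. (2016) define the saliency map for increasing input features as follows. F_j(x) is the model’s output for class j and t is the target class. The map scores feature i highly only when raising it increases the target output while decreasing the others:

$$
S(x, t)[i] =
\begin{cases}
0 & \text{if } \dfrac{\partial F_t(x)}{\partial x_i} < 0 \ \text{ or } \ \displaystyle\sum_{j \ne t} \dfrac{\partial F_j(x)}{\partial x_i} > 0 \\[2ex]
\dfrac{\partial F_t(x)}{\partial x_i} \left| \displaystyle\sum_{j \ne t} \dfrac{\partial F_j(x)}{\partial x_i} \right| & \text{otherwise}
\end{cases}
$$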

JSMA Implementation in PyTorch

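```python
import torch

def jsma_attack(model, image, target_label, num_features, theta=1.0):
    """Simplified targeted JSMA: repeatedly raise the single most salient pixel.

    Simplification: ranks pixels by the gradient of the target logit only, instead of
    the full pairwise saliency map. Assumes a batch of one image, pixels in [0, 1],
    and an integer `target_label`.
    """
    perturbed_image = image.clone().detach()
    modified = torch.zeros_like(perturbed_image, dtype=torch.bool)  # Change each pixel at most once

    for _ in range(num_features):
        x = perturbed_image.clone().requires_grad_(True)
        logits = model(x)
        if logits.argmax(dim=1).item() == target_label:
            break  # Target class reached
        logits[0, target_label].backward()  # Gradient of the target score w.r.t. each pixel
        saliency = x.grad.clone()
        saliency[modified | (perturbed_image >= 1)] = float("-inf")  # Skip used or saturated pixels
        idx = torch.argmax(saliency)  # Pixel that most increases the target score
        flat = perturbed_image.view(-1)
        flat[idx] = torch.clamp(flat[idx] + theta, 0, 1)  # Modify the most influential pixel
        modified.view(-1)[idx] = True

    return perturbed_image
```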

Why JSMA is Effective

- Precision: It modifies only a few key pixels, reducing the chance of detection.

- Targeted: Can be tuned to force the model into predicting a specific class.

- Efficient: Requires fewer changes compared to full gradient attacks.

Key Takeaways

- Full Access Advantage: White-box attacks utilize complete knowledge of a model to craft precise adversarial examples.

- Diverse Techniques: FGSM, PGD, C&W, and JSMA each offer unique advantages, balancing speed, subtlety, and robustness.

- Defense Implications: The variety and effectiveness of these methods underscore the importance of developing multi-layered defenses for AI systems.

- Real-World Impact: These attacks have demonstrated vulnerabilities in both commercial and research settings, emphasizing the need for continuous improvement in AI security.

Gray-Box Attacks

Gray-box attacks strike a balance between having complete model knowledge and relying solely on output observations. They typically estimate gradients or use probabilistic feedback, allowing attackers to craft adversarial examples even when direct gradient information is unavailable.

Zeroth-Order Optimization (ZOO) Attack

ZOO is a powerful, gradient-free technique that approximates the model’s gradients by probing its output with subtly perturbed inputs. By using finite difference methods, ZOO infers the necessary gradient directions without needing internal model details.

How ZOO Works

- Begin with an initial input sample and perturb one coordinate (for example, one pixel) at a time by a small amount h in both directions; observe the resulting changes in output probabilities to form a finite difference estimate.

- Compute the gradient approximation using the difference quotient, effectively turning output variations into a directional signal.

- Iteratively update the input based on the estimated gradients, carefully adjusting the step size to stay within permissible perturbation bounds.

Real-World Example

In a notable demonstration, attackers targeted cloud-based image classifiers like the Google Cloud Vision API. By strategically querying the system and estimating gradients from subtle shifts in confidence scores, they managed to manipulate the input until the system misidentified objects, highlighting the vulnerability even in systems with limited internal access.

Natural Evolution Strategies (NES) Attack

NES borrows its name from natural evolution strategies, a family of black-box optimizers, and uses them to craft adversarial inputs without direct gradient access. Instead of calculating precise gradients, NES estimates them by sampling. It queries the model on many randomly perturbed copies of the input and uses the results to refine the adversarial perturbation step by step.

How NES Works

- Sample a population of small perturbations from a Gaussian distribution around the current input and evaluate how each affects the model’s confidence in the true class.

- Average the sampled perturbations, weighted by their effect on that confidence, to obtain a gradient estimate. Then take a small projected step along it, as in PGD, and repeat until the input is misclassified.
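The gradient estimate behind NES-based attacks, such as the one by Ilyas et al. (2018), fits in a few lines of NumPy. Sample Gaussian directions u and query the score at x ± σu, using antithetic pairs to reduce variance. Then average the directions, weighted by the score differences. The parameters `score_fn`, `sigma`, and `n_samples` are illustrative assumptions. A full attack would plug this estimate into projected gradient steps.

```python
import numpy as np

def nes_gradient_estimate(score_fn, x, sigma=0.001, n_samples=50, rng=None):
    """Estimate the gradient of a black-box score from 2 * n_samples queries."""
    rng = np.random.default_rng() if rng is None else rng
    x = np.asarray(x, dtype=float)
    grad = np.zeros_like(x)
    for _ in range(n_samples):
        u = rng.standard_normal(x.shape)  # Random search direction
        # Antithetic pair: query both x + sigma*u and x - sigma*u
        grad += (score_fn(x + sigma * u) - score_fn(x - sigma * u)) * u
    return grad / (2 * sigma * n_samples)

# Usage: for a linear score w.x the estimate converges to w as n_samples grows
w = np.array([1.0, -2.0, 0.5])
estimate = nes_gradient_estimate(lambda z: float(w @ z), np.zeros(3), n_samples=20_000,
                                 rng=np.random.default_rng(0))
print(estimate)
```

With only a few dozen samples the estimate is noisy, but its sign pattern is often good enough for sign-based steps like PGD’s.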

Real-World Example

NES-based attacks have been deployed against fraud detection systems. In one case, attackers subtly modified transaction details, using iterative sampling to decrease the fraud probability until the transactions were misclassified as legitimate. This approach underscored the threat posed by query-efficient, evolution-inspired methods in high-stakes financial systems.

Square Attack: A Query-Efficient Gray-Box Attack

Square Attack adopts a localized approach by targeting small square patches within an image. This method reduces the number of queries needed by focusing on altering only key regions rather than the entire input.

How Square Attack Works

- Randomly select a square patch from the image using uniform sampling over potential patch locations.

- Apply a structured perturbation to the selected patch, typically setting each channel of the patch to ±ε relative to the original image, and observe the change in the model’s confidence.

- Keep the change only if it lowers the model’s confidence in the true class. Repeat with new random locations, gradually shrinking the square size, until the predicted class shifts while the modifications stay within the adversarial budget.
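Below is a minimal NumPy sketch of the random-search loop at the heart of Square Attack (Andriushchenko et al., 2020), for the L∞ case. It rests on three assumptions:

- `loss_fn` is a black-box margin loss that drops below zero once the input is misclassified.
- The image has shape (C, H, W) with values in [0, 1].
- The square size is held fixed, whereas the paper shrinks it on a schedule.

The toy loss in the usage lines is hypothetical.

```python
import numpy as np

def square_attack(loss_fn, x, epsilon, n_iters=200, p=0.1, rng=None):
    """Random search over square patches within an L-infinity budget (attacker minimizes loss_fn)."""
    rng = np.random.default_rng() if rng is None else rng
    c, h, w = x.shape
    # Start from vertical stripes of +/- epsilon, as the paper does for L-infinity
    x_adv = np.clip(x + epsilon * rng.choice([-1.0, 1.0], size=(c, 1, w)), 0, 1)
    best = loss_fn(x_adv)
    s = max(1, int(round(np.sqrt(p * h * w))))  # Square side covering roughly a fraction p of pixels
    for _ in range(n_iters):
        if best < 0:
            break  # Already misclassified
        r, col = rng.integers(0, h - s + 1), rng.integers(0, w - s + 1)
        candidate = x_adv.copy()
        # Reset the square to the original pixels shifted by +/- epsilon per channel
        candidate[:, r:r + s, col:col + s] = x[:, r:r + s, col:col + s] + epsilon * rng.choice([-1.0, 1.0], size=(c, 1, 1))
        candidate = np.clip(candidate, 0, 1)
        cand_loss = loss_fn(candidate)
        if cand_loss < best:  # Keep the change only if it helps
            x_adv, best = candidate, cand_loss
    return x_adv

# Usage with a hypothetical margin: "misclassified" once mean brightness exceeds 0.52
x = np.full((1, 8, 8), 0.5)
toy_loss = lambda z: float(0.52 - z.mean())
x_adv = square_attack(toy_loss, x, epsilon=0.1, rng=np.random.default_rng(0))
```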

Real-World Example

Square Attack has been used to compromise robust classifiers in high-resolution image recognition tasks. By modifying only small, localized patches, attackers managed to bypass defenses such as JPEG compression and input smoothing, proving that even hardened models can be vulnerable to targeted, query-efficient attacks.

Black-Box Attacks

Black-box attacks are among the most practical and widely applicable adversarial techniques. In white-box attacks the attacker has full access to a model’s internals, and in gray-box attacks partial information (like probability scores) is available. Black-box attacks, by contrast, operate under complete opacity: the attacker only sees the final output (e.g., a classification label).

Even with these limitations, attackers have developed powerful query-based and transfer-based methods that can successfully fool AI models. Let’s explore some of the most effective black-box attack strategies.

The HopSkipJump Attack: A Decision-Based Approach

Some black-box systems return only a final classification label, rendering probability scores or gradients inaccessible. HopSkipJump is designed for this exact scenario.

Key Characteristics:

- Query-efficient: It minimizes the number of queries needed to generate an adversarial example.

- Gradient-free: It never reads the model’s gradients. Instead, it estimates the gradient direction at the decision boundary from label-only queries and uses that estimate to refine the adversarial input.

How HopSkipJump Works:

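- Start from an input the model already misclassifies (for instance, an image labeled “cat” when the original is a “dog”).

- Binary-search between that input and the original to land on the decision boundary. Then estimate the boundary’s gradient direction by checking how the label changes under small random perturbations.

- Step along that direction, search back to the boundary, and repeat. Each round shrinks the perturbation while keeping the input adversarial and the query count low.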

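Code Example: Simplified HopSkipJump in PyTorch

```python
import torch

def hopskipjump_attack(model, image, label, init_image, num_iter=20, num_evals=100, delta=0.01):
    """Simplified untargeted HopSkipJump (L2) that only observes predicted labels.

    Assumes a batch of one image with pixels in [0, 1], an integer `label`, and an
    `init_image` the model already misclassifies. Omits the paper's adaptive schedules.
    """
    def is_adversarial(x):
        with torch.no_grad():
            return model(x).argmax(dim=1).item() != label

    def to_boundary(x_adv, steps=15):
        # Binary search on the blend factor between the original (0) and x_adv (1)
        lo, hi = 0.0, 1.0
        for _ in range(steps):
            mid = (lo + hi) / 2
            if is_adversarial(image + mid * (x_adv - image)):
                hi = mid
            else:
                lo = mid
        return image + hi * (x_adv - image)

    x_adv = to_boundary(init_image)
    shape = (-1,) + (1,) * (image.dim() - 1)  # One weight per sampled direction
    for t in range(1, num_iter + 1):
        # Estimate the boundary's gradient direction from label-only queries
        u = torch.randn(num_evals, *image.shape[1:])
        u = u / u.flatten(1).norm(dim=1).view(shape)
        signs = torch.tensor([1.0 if is_adversarial((x_adv + delta * ui).clamp(0, 1)) else -1.0 for ui in u])
        weights = signs - signs.mean() if signs.mean().abs() < 1 else signs  # Baseline reduces variance
        grad = (weights.view(shape) * u).mean(dim=0, keepdim=True)
        grad = grad / (grad.norm() + 1e-12)
        # Geometric step-size search, then project back onto the boundary
        step = (x_adv - image).norm().item() / t ** 0.5
        candidate = (x_adv + step * grad).clamp(0, 1)
        while not is_adversarial(candidate) and step > 1e-6:
            step /= 2
            candidate = (x_adv + step * grad).clamp(0, 1)
        if is_adversarial(candidate):
            x_adv = to_boundary(candidate)
    return x_adv.detach()
```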

Real-World Example: Fooling Cloud AI Models

Researchers have employed HopSkipJump to attack cloud-based machine learning APIs like Google Cloud Vision API. By submitting repeated queries with slightly altered images, they demonstrated that even without internal model details, attackers could force misclassification. In one experiment, an AI-powered fraud detection system was deceived by iteratively modifying transaction details until fraudulent behavior was misclassified as legitimate.

The Boundary Attack: Adversarial Optimization with No Prior Knowledge

Sometimes you can see only the final decision, with no access to gradients or probability scores. The Boundary Attack is designed for this case. It uses a random-walk method to refine a starting adversarial example.

How Boundary Attack Works:

- Start with a fully misclassified input rather than a clean one.

- Gradually move the adversarial example closer to the original input while preserving the misclassification.

- Terminate the process when the perturbation stops shrinking or the query budget runs out.

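Code Example: Simplified Boundary Attack in PyTorch

```python
import torch

def boundary_attack(model, image, label, init_image, num_iter=1000, spherical_step=0.01, source_step=0.01):
    """Simplified untargeted Boundary Attack: a random walk along the decision boundary.

    Assumes a batch of one image with pixels in [0, 1], an integer `label`, and an
    `init_image` (for example, uniform noise) that the model already misclassifies.
    Omits the paper's adaptive step-size tuning.
    """
    def is_adversarial(x):
        with torch.no_grad():
            return model(x).argmax(dim=1).item() != label

    if not is_adversarial(init_image):
        raise ValueError("init_image must already be misclassified")
    x_adv = init_image.clone()
    for _ in range(num_iter):
        diff = image - x_adv
        dist = diff.norm()
        # 1) Random step, made orthogonal to the direction of the original image
        eta = torch.randn_like(image)
        eta = eta - (eta * diff).sum() / (dist ** 2 + 1e-12) * diff
        eta = eta / (eta.norm() + 1e-12) * spherical_step * dist
        # 2) Small step toward the original image
        candidate = x_adv + eta
        candidate = (candidate + source_step * (image - candidate)).clamp(0, 1)
        # Accept the move only if the input is still misclassified
        if is_adversarial(candidate):
            x_adv = candidate
    return x_adv.detach()
```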

Case Study: Fooling Tesla’s Speed-Limit Recognition with Tape

In one of the most famous real-world black-box attacks, McAfee researchers (2020) tricked the camera-based speed-limit recognition in 2016-era Tesla models, which used a Mobileye EyeQ3 camera. They did it by placing a strip of black tape on a road sign. For example:

- A 35 mph speed limit sign was misread as 85 mph after the tape extended the middle stroke of the “3”.

- The attack was entirely black-box, as the researchers had no access to the proprietary model.

- This experiment demonstrates that even simple physical adversarial examples can bypass production models, posing a significant risk to autonomous vehicles, security cameras, and biometric systems.

The One-Pixel Attack: Minimal Perturbation, Maximum Impact

The One-Pixel Attack illustrates that even minimal changes can have a drastic impact on a model’s output. By modifying only one or a few pixels, this method achieves surprising attack success rates.

Why It Works:

- Evolution-based: It evolves candidate pixel changes with differential evolution, an evolutionary algorithm built on mutation and selection.

- Minimal Change: Only a single or a few pixels are altered, making detection extremely challenging.

- High Success Rate: Despite its simplicity, the attack often succeeds in misclassifying images.

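Code Example: One-Pixel Attack in PyTorch (Random-Search Variant)

```python
import random
import torch

def one_pixel_attack(model, image, label, num_iterations=100):
    """Random-search variant of the One-Pixel Attack (the original uses differential evolution).

    Assumes a batch of one image shaped (1, C, H, W) with pixels in [0, 1] and an
    integer `label`. Returns None if no single-pixel change works within the budget.
    """
    for _ in range(num_iterations):
        perturbed_image = image.clone()  # Restart from the clean image so only ONE pixel differs
        x, y = random.randint(0, image.shape[2] - 1), random.randint(0, image.shape[3] - 1)
        perturbed_image[:, :, x, y] = torch.rand(image.shape[1])  # New random value for every channel of that pixel
        with torch.no_grad():
            if model(perturbed_image).argmax(dim=1).item() != label:
                return perturbed_image  # Stop when misclassification occurs
    return None
```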
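The original One-Pixel Attack (Su et al.) searches with differential evolution rather than pure random sampling. Below is a minimal NumPy sketch of that idea, with these assumptions:

- `prob_fn` is a black-box function returning class probabilities for an (H, W, C) image in [0, 1].
- Each candidate encodes (row, column, new channel values).
- The mutation follows the DE/rand/1 form.
- Population size, generation count, and mutation scale are illustrative defaults, not the paper’s settings.

The toy classifier in the usage lines is hypothetical.

```python
import numpy as np

def one_pixel_de(prob_fn, image, label, pop_size=50, generations=30, f_scale=0.5, rng=None):
    """Differential-evolution search for a single-pixel change that lowers the true-class probability."""
    rng = np.random.default_rng() if rng is None else rng
    h, w, c = image.shape
    low = np.zeros(2 + c)
    high = np.array([h - 1, w - 1] + [1.0] * c, dtype=float)

    def apply(cand):
        img = image.copy()
        img[int(round(cand[0])), int(round(cand[1]))] = cand[2:]  # Overwrite exactly one pixel
        return img

    def fitness(cand):  # Lower is better: probability left on the true label
        return prob_fn(apply(cand))[label]

    pop = rng.uniform(low, high, size=(pop_size, 2 + c))
    scores = np.array([fitness(p) for p in pop])
    for _ in range(generations):
        for i in range(pop_size):
            a, b, d = pop[rng.choice(pop_size, 3, replace=False)]
            child = np.clip(a + f_scale * (b - d), low, high)  # DE/rand/1 mutation
            child_score = fitness(child)
            if child_score < scores[i]:  # Greedy selection: child replaces its parent
                pop[i], scores[i] = child, child_score
    return apply(pop[np.argmin(scores)])

# Usage with a hypothetical classifier that flips to class 1 once any pixel exceeds about 0.7
def toy_prob(img):
    p1 = 1.0 / (1.0 + np.exp(-20.0 * (img.max() - 0.7)))
    return np.array([1.0 - p1, p1])

clean = np.zeros((5, 5, 1))
adv = one_pixel_de(toy_prob, clean, label=0, rng=np.random.default_rng(0))
```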

Applications in the Real World:

One-Pixel Attacks have been used to:

- Misclassify objects in image recognition systems by altering a single pixel.

- Compromise facial recognition security by causing identity confusion.

- Break CAPTCHA systems through strategically modified pixels.

Transfer-Based Attacks

Transfer-based attacks exploit a fascinating vulnerability in deep learning models: adversarial examples often transfer from one model to another. This lets attackers train a surrogate (substitute) model, craft adversarial inputs against it with white-box techniques, and then deploy those inputs against an entirely different model, all without directly interacting with it.

How Transfer-Based Attacks Work:

1️⃣ Train a Substitute Model:

- The attacker collects input samples and may query the target model for labels.

- If querying is not possible, a model is trained on a similar dataset to mimic the target’s behavior.

2️⃣ Generate Adversarial Examples:

- Techniques like FGSM, PGD, or C&W are used on the substitute model to craft adversarial examples.

3️⃣ Deploy Against the Target Model:

- The adversarial examples are then applied to the target model, often causing misclassification due to similar decision boundaries across models.
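The toy NumPy illustration below shows why step 3 often works. The "target" and "substitute" are two logistic-regression models whose weights are correlated but not identical, a stand-in assumption for two networks with similar decision boundaries. An FGSM step crafted only from the substitute’s weights also flips the target’s decision. All weights and sizes are made up for illustration.

```python
import numpy as np

def fgsm_logistic(w, x, y, epsilon):
    """FGSM against logistic regression p(y=1|x) = sigmoid(w.x), using only the weights w."""
    p = 1.0 / (1.0 + np.exp(-(w @ x)))
    grad_x = (p - y) * w  # Gradient of the cross-entropy loss with respect to x
    return x + epsilon * np.sign(grad_x)

rng = np.random.default_rng(0)
w_target = rng.normal(size=100)  # Weights the attacker never uses
w_substitute = w_target + 0.5 * rng.normal(size=100)  # Imperfect imitation of the target
x = 0.05 * np.sign(w_target)  # An input the target labels as class 1

x_adv = fgsm_logistic(w_substitute, x, y=1, epsilon=0.2)  # Crafted on the substitute only
print(w_target @ x > 0, w_target @ x_adv > 0)
```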

The Substitute Model Attack

One of the seminal transfer-based attacks was introduced by Papernot et al. (2017). The attacker trains a substitute model by querying the target model and using its outputs to build a surrogate. Adversarial inputs generated on the substitute can then be successfully applied to the target.

Code Example: Training a Substitute Model in PyTorch

```python
import torch
import torch.nn as nn
import torch.optim as optim

def train_substitute_model(target_model, train_loader, num_epochs=5):
    """Trains a substitute model on labels obtained by querying the target model."""
    substitute_model = nn.Sequential(
        nn.Conv2d(3, 16, kernel_size=3, stride=1, padding=1),
        nn.ReLU(),
        nn.Flatten(),
        nn.Linear(16 * 32 * 32, 10),  # Assumes CIFAR-10-sized inputs (3x32x32) and 10 classes
    )
    optimizer = optim.Adam(substitute_model.parameters(), lr=0.001)
    loss_fn = nn.CrossEntropyLoss()

    for epoch in range(num_epochs):
        for images, _ in train_loader:  # Ground-truth labels are ignored
            with torch.no_grad():
                labels = target_model(images).argmax(dim=1)  # Query the target model for labels
            optimizer.zero_grad()
            outputs = substitute_model(images)
            loss = loss_fn(outputs, labels)
            loss.backward()
            optimizer.step()

    return substitute_model  # Trained to imitate the target model
```

Why This Works:

- In Papernot et al.’s experiments, adversarial examples crafted on the substitute were misclassified by remote models hosted by MetaMind, Amazon, and Google at rates above 80%.

- This method effectively bypasses restrictions on direct querying of the victim model.

Momentum Iterative FGSM (MI-FGSM)

One drawback of early transfer attacks was the overfitting of adversarial examples to the substitute model. Dong et al. (2018) introduced MI-FGSM to mitigate this by incorporating momentum into gradient updates, making the perturbations more transferable.

How MI-FGSM Works:

- Gradients are accumulated using a momentum term to stabilize updates.

- The method iteratively applies these stabilized gradients to craft perturbations.

- This reduces overfitting to the substitute model, enhancing transferability across models.
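Here is the update rule from Dong et al. (2018). J(θ, x, y) is the loss, μ is the decay factor, α is the step size, g_0 = 0, and x_0 = x. The operator clip_{x,ε} keeps every pixel within ε of the original image:

$$
g_{t+1} = \mu \cdot g_t + \frac{\nabla_x J(\theta, x_t, y)}{\lVert \nabla_x J(\theta, x_t, y) \rVert_1}
$$

$$
x_{t+1} = \operatorname{clip}_{x, \epsilon}\big(x_t + \alpha \cdot \operatorname{sign}(g_{t+1})\big)
$$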

Code Example: MI-FGSM Attack in PyTorch

```python
import torch
import torch.nn.functional as F

def mi_fgsm_attack(model, image, label, epsilon, alpha, num_iter, decay_factor=1.0):
    """Momentum Iterative FGSM (MI-FGSM) attack for transferability (L-infinity)."""
    perturbed_image = image.clone().detach().requires_grad_(True)
    momentum = torch.zeros_like(image)

    for _ in range(num_iter):
        output = model(perturbed_image)
        loss = F.cross_entropy(output, label)
        model.zero_grad()
        loss.backward()
        grad = perturbed_image.grad.detach()
        # Normalize each example's gradient by its own L1 norm, then accumulate momentum
        l1 = grad.abs().flatten(1).sum(dim=1).view(-1, *[1] * (grad.dim() - 1))
        momentum = decay_factor * momentum + grad / (l1 + 1e-12)
        with torch.no_grad():
            perturbed_image = perturbed_image + alpha * momentum.sign()  # Step along the momentum sign
            perturbed_image = torch.clamp(perturbed_image, image - epsilon, image + epsilon)  # Keep within bounds
            perturbed_image = torch.clamp(perturbed_image, 0, 1)  # Keep valid pixel values
        perturbed_image = perturbed_image.detach().requires_grad_(True)  # Fresh leaf tensor for the next step

    return perturbed_image.detach()
```

Why MI-FGSM is Effective:

- Generates adversarial examples that generalize well across different models.

- Reduces overfitting to the substitute, increasing the likelihood of transfer.

- Has shown high success rates in attacking cloud-based AI models where the target is unknown.

Ensemble Attacks: Maximizing Transferability

For even higher success rates, attackers can use ensemble attacks. Instead of relying on a single substitute model, adversarial examples are crafted to fool multiple models simultaneously.

How Ensemble Attacks Work:

- Combine predictions from several substitute models to generate a unified adversarial perturbation.

- This approach leverages the common decision boundaries shared by many models.

- The resulting adversarial examples are more robust and likely to fool an unseen target model.

Code Example: Ensemble Attack in PyTorch

```python
import torch
import torch.nn.functional as F

def ensemble_attack(models, image, label, epsilon):
    """Single FGSM-style step against the average loss of several substitute models."""
    perturbed_image = image.clone().detach().requires_grad_(True)
    # Averaging the losses lets one gradient step push against every model at once
    loss = sum(F.cross_entropy(model(perturbed_image), label) for model in models) / len(models)
    for model in models:
        model.zero_grad()
    loss.backward()
    perturbed_image = perturbed_image + epsilon * perturbed_image.grad.sign()
    perturbed_image = torch.clamp(perturbed_image, 0, 1)  # Ensure valid pixel values
    return perturbed_image.detach()
```

Real-World Case Study: Adversarial Patches

Researchers have taken transfer-based attacks into the physical realm. One famous demonstration involved adversarial patches: visual patterns that, when applied to objects, fool AI systems. For example:

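- The Adversarial T-Shirt (2020): A team printed an adversarial pattern on a T-shirt that caused person detection models to fail. The pattern was optimized jointly against several detectors while modeling how the fabric deforms as the wearer moves.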

- The same patch transferred successfully across multiple detectors, proving that transfer-based adversarial examples are not limited to the digital domain.

- Such attacks pose risks for surveillance systems, autonomous vehicles, and security applications.

Final Thoughts: The Danger of Transfer-Based Attacks

- Zero-query Potential: Attackers can bypass API limits by training their own surrogate models.

- High Practicality: Transfer attacks work even when target models differ architecturally.

- Robustness Against Defenses: Common defenses like input filtering and defensive distillation often fail against well-crafted transfer-based adversarial examples.

Conclusion

Machine learning models are powerful but vulnerable. Adversarial attacks exploit their weaknesses, fooling models in ways imperceptible to humans. Whether through white-box, black-box, or transfer-based methods, attackers have developed sophisticated ways to deceive AI systems.

Understanding these attacks is the first step toward building more resilient models. With techniques like adversarial training, defensive distillation, and input preprocessing, we can work toward more robust AI systems.

AI security is not just an academic concern; it affects self-driving cars, facial recognition, finance, and even healthcare. As adversarial techniques evolve, so must our defenses. The next generation of AI engineers must think like attackers to build systems that can withstand them.